Announcing Software Update 7.5!

Caching Enhancements & RTR Beta is now G/A

Our first release of 2014 is now available. This release contains two key features: 1) NCO (caching) module enhancements, and 2) our 7.4 Dynamic Real-Time Reporting (RTR) Beta is now Generally Available.

Caching Enhancements

To improve YouTube hit ratios for our NetEqualizer Caching Option (NCO), we have invested in technology that keeps up with the changing way YouTube is delivered. YouTube URLs look like dynamic content most of the time, even when the video is the same one you watched the day before. One of the basic tenets of a caching engine is to NOT cache dynamic content. In the case of YouTube videos, we have built out logic to cache them anyway, because the content is not really dynamic; only the addressing is.
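To make the "dynamic addressing" idea concrete, here is a minimal sketch of how a cache could recognize the same video behind two different-looking URLs. It is an illustration only, not the NCO implementation: the host names, the `/videoplayback` path, the sample URLs, and the choice of `id`, `itag`, and `range` as the stable parameters are all assumptions made for this example.

```python
from urllib.parse import urlsplit, parse_qsl

# Hypothetical: query parameters assumed to identify the content itself.
# Everything else (expiry times, signatures, and so on) is treated as
# per-request noise and ignored.
STABLE_PARAMS = ("id", "itag", "range")


def cache_key(url: str) -> str:
    """Build a cache key that ignores the per-request parts of a URL."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    # Keep only the parameters assumed to identify the video content,
    # always in the same order.
    stable = [f"{name}={query[name]}" for name in STABLE_PARAMS if name in query]
    # The host is left out on purpose: in this sketch the same video may be
    # served from a different server name on a different day.
    return parts.path + "?" + "&".join(stable)


# Two made-up requests for the same video on different days.
monday = "http://r3.example-video.test/videoplayback?id=abc123&itag=22&expire=1389000000&signature=AAAA"
tuesday = "http://r7.example-video.test/videoplayback?expire=1389086400&itag=22&id=abc123&signature=BBBB"

print(cache_key(monday) == cache_key(tuesday))
```

In this sketch both requests map to the same key, so the second one could be served from the local cache even though the full URLs differ.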

For this release, we consulted with some of the top caching engineers in the world to ensure that we are evolving our caching engine to keep up with the latest addressing schemes. This required a change to our caching logic and some extensive testing in our labs.

It is now economically feasible to make the jump to a 1TB SSD. As of 7.5, we have increased our SSD size from 256GB to 1TB. All new caching customers will be shipped the 1TB SSD. For existing NCO customers, if you would like to upgrade your drive size, please contact us for pricing.

New Reporting Features

Our Real-Time Reporting Tool (RTR) Beta version is now Generally Available! We had some great feedback over the last couple of months and are very happy with the way it turned out. Thanks to everyone who participated in our Beta!

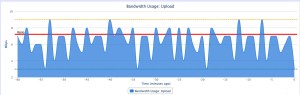

The new reporting features built into RTR allow for traffic reporting functionality similar to what you get from ntop. You can see overall traffic patterns from a historical point of view, and you can also drill down to see traffic patterns for specific IP addresses you want to track.

In addition, we added the ability to show all rules associated with an IP address for easy troubleshooting. You can now see if a specific IP address is a member of a pool, has an associated hard limit, has priority, or has a connection limit.

Check out our Software Update 7.5 Release Notes for more details on what Software Update 7.5 includes.

These features will be free to customers with valid NetEqualizer Software and Support who are running version 7.0+ (NCO features will require NCO). If you are not current with NSS, contact us today!

sales@apconnections.net

-or-

303-997-1300

2014 NetEqualizer Pricing Preview

As we begin a new year, we are releasing our 2014 Price List for NetEqualizer, which will be effective February 1st, 2014.

Of note this year is that we have added back a 10Mbps license level to our NE3000 series.

We also continue to offer license upgrades on our NE2000 series. Remember that if you have an NE2000 purchased in or after August 2011, it will be supported past 12/31/2014. If you have an older NE2000, please contact us to discuss your options.

All Newsletter readers can get an advance peek here! For a limited time, the 2014 Price List can be viewed here without registration. You can also view the Data Sheets for each model once in the 2014 Price List.

Current quotes will not be affected by the pricing updates, and will be honored for 90 days from the date the quote was originally given.

If you have questions on pricing, feel free to contact us at:

sales@apconnections.net

-or-

303-997-1300

NCO Customers Will Soon Have Access to a Full Movie Library!

One of the things we had on our docket to work on this winter and spring was to expand our caching offering (NCO) to include Netflix.

In our due diligence we consulted with the Netflix Open Connect team (their caching engine), and discovered that they just don’t have the resources to support ISPs with less than a 5 Gbps Netflix stream. Thus, we could not bundle their caching engine into our NCO offer – it is just too massive in scope.

Streaming long-form video content on the Internet cannot be accomplished reliably without a caching engine. It doesn’t matter how big your pipe is, you need to have a chunk of content stored locally to even have a chance to meet the potential demand – if you make any promises of consistent video content. This is why Netflix has spent millions of dollars providing caching servers to the largest commercial providers. Even with commercial providers’ big pipes to the backbone, they need to host Netflix content on their regional networks.

So what can we do to help our customers offer reliable streaming video content?

1) We would have to load up a caching server with content locally.

2) We would have to continually update it with new and interesting material.

3) We would need to take care of licensing desirable content.

The licensing part is the key to all this. It is not easy with some of the politics in the film industry, but after reaching out to some contacts over the last couple of weeks, it actually is very doable, due to the increase in independent distributors looking for channels.

Did you know that NetEqualizer servers sit in front of roughly 5,000,000 end users? This is sort of a “perfect storm” come to fruition. We have thousands of potential caching servers and a channel in place to serve a set of customers that currently do not have access to online streaming of full-length movie content. A customer running NCO would be able to choose between a Pay-Per-View (PPV) model and an unlimited content (UC) option.

The details and mechanics of these two options will be outlined in our February Newsletter. In the meantime, please let us know your thoughts on how this offering would work best for your organization, and get on board with NCO to get the ball rolling!

To learn more about NCO, please read our Caching Executive White Paper.

If you have questions, contact us at:

Coming Soon: Get Website Category Data from NCO

Along with our other enhancements to the NetEqualizer Caching Option (NCO), another feature we’ll be rolling out soon is the ability to gather website category data for sites visited by your users.

This data can not only be used to tune your NetEqualizer, but will help in enforcing usage policies and other requirements.

To learn more about NCO, please read our Caching Executive White Paper.

If you are interested in NCO or have questions about this feature, contact us at:

Best Of The Blog

Top 10 Out-of-the-Box Technology Predictions for 2014

By Art Reisman – CTO – APconnections

Back in 2011, I posted some technology predictions for 2012. Below is my revised and updated list for 2014.

1) Look for Google, or somebody, to launch an Internet Service using a balloon off the California Coast.

Well, it turns out those barges out in San Francisco Bay are for something far less ambitious than a balloon-based Internet service, but I still think this is on the horizon, so I am sticking with it.

2) Larger, slower transport planes to bring down the cost of comfortable international and long range travel.

I did some analysis on the costs of airline operators, and the largest percentage of the cost in air travel is fuel. You can greatly reduce fuel consumption per mile by flying larger, lighter aircraft at slower speeds. Think of these future airships like cruise ships. They will have more comforts than the typical packed cross-continental flight of today. My guess is, given the choice, passengers will trade off speed for a little price break and more leg room…

Posted January 3, 2014 by netequalizer

3) I am still calling for somebody to make a smart contextual search engine with a brain that weeds through the muck of bad, useless commercial content to give you a decent result. It seems that every year, intentionally or not, Google muddles its search results further toward the commercial. It is like the travel magazine that claims its editorial and advertising units are not related; somehow the flow of money overrides good intentions. Google is very vulnerable to a mass exodus should somebody pull off a better search engine. Perhaps this search engine would allow the user to filter results from less commercial to more commercial sites?

4) Drones? Sc#$$ drones. Amazon is not ever going to deliver any consumer package with a drone service. This PR stunt was sucked up by the media. Yes, there will be many uses for unmanned aircraft, but not residential delivery.

5) Somebody is going to genetically engineer an ant colony to do something useful. Something simple, like filling in potholes in streets with little pebbles. The ants in Colorado already pile up mounds of pebbles around their colonies; we have just got to get them to put them in the right place.

6) Protein shakes made out of finely powdered exoskeletons of insects. Not possible? Think of all the byproduct that goes into something like a hot dog, and nobody flinches. If you could harvest a small percent of the trillions of grasshoppers in the world, dry them, and grind them up, you would have an organic protein source without any environmental impact or those dreaded GMOs.

7) Look for more drugs that stop cancer at the cell level by turning off genetic markers.

This is my brother's ongoing research at the University of Florida.

8) A diet pill that promotes weight loss without any serious side effects.
